The pi3hat repository, in addition to hosting a library for sending raw CAN frames with the pi3hat, has long included example source code demonstrating how to use that library to communicate with a moteus controller. Recently, however, C++ bindings were officially added to the primary moteus repository, which provide a more consistent, flexible and complete interface to moteus controllers. I’ve updated the pi3hat library to take advantage of that new interface, so the pi3hat can now be treated as just another transport, like the fdcanusb.

First, I’ll show the most minimal way to use the pi3hat, then describe a bit of how it works and some more advanced uses.

Usage

There are two easy ways to include the pi3hat in your project: copying the sources, or using cmake.

Copying sources

The full source code for the moteus C++ library and the pi3hat transport is included in each binary distribution, in the ‘YYYYMMDD-pi3hat_tools-*.tar.bz2’ file. For source access, all the distributions are equivalent. Inside that tarball, the directory foo/bar contains the examples from https://github.com/mjbots/moteus/tree/main/lib/cpp/examples along with the necessary pi3hat source files and a Makefile that can be used to compile all the examples on a Raspberry Pi. With this method, you can simply copy all the source files from that distribution into your project, and re-copy them when you want to update to a newer version.

cmake

The second method is to use cmake with FetchContent. This works best with cmake 3.24 or newer, but 3.10 or newer is permitted:

```cmake
include(FetchContent)

FetchContent_Declare(
  pi3hat
  GIT_REPOSITORY https://github.com/mjbots/pi3hat.git
  # Replace with the pi3hat commit or tag you want to build against.
  GIT_TAG 00112233445566778899aabbccddeeff00112233
)
FetchContent_MakeAvailable(pi3hat)

add_executable(myproject myproject.cc)
target_link_libraries(myproject pi3hat::pi3hat)
```

Development

No matter how the library is incorporated, the API is basically the same. The only possible difference is that with cmake versions older than 3.24, or depending upon how you structure your build system, you may need to register the pi3hat transport manually:

```cpp
#include "pi3hat_moteus_transport.h"

// ... near the beginning of main()
mjbots::pi3hat::Pi3HatMoteusFactory::Register();
```

After that, in simple cases, you can use the moteus C++ library exactly as you would with the fdcanusb or other operating-system-level transports. If you want to control pi3hat parameters manually, the transport can be constructed explicitly, in a similar manner to the Python API:

using Transport = mjbots::pi3hat::Pi3HatMoteusTransport;

Transport::Options toptions;

toptions.can[4].slow_bitrate = 125000;

toptions.can[4].fdcan_frame = false;

toptions.can[4].bitrate_switch = false;

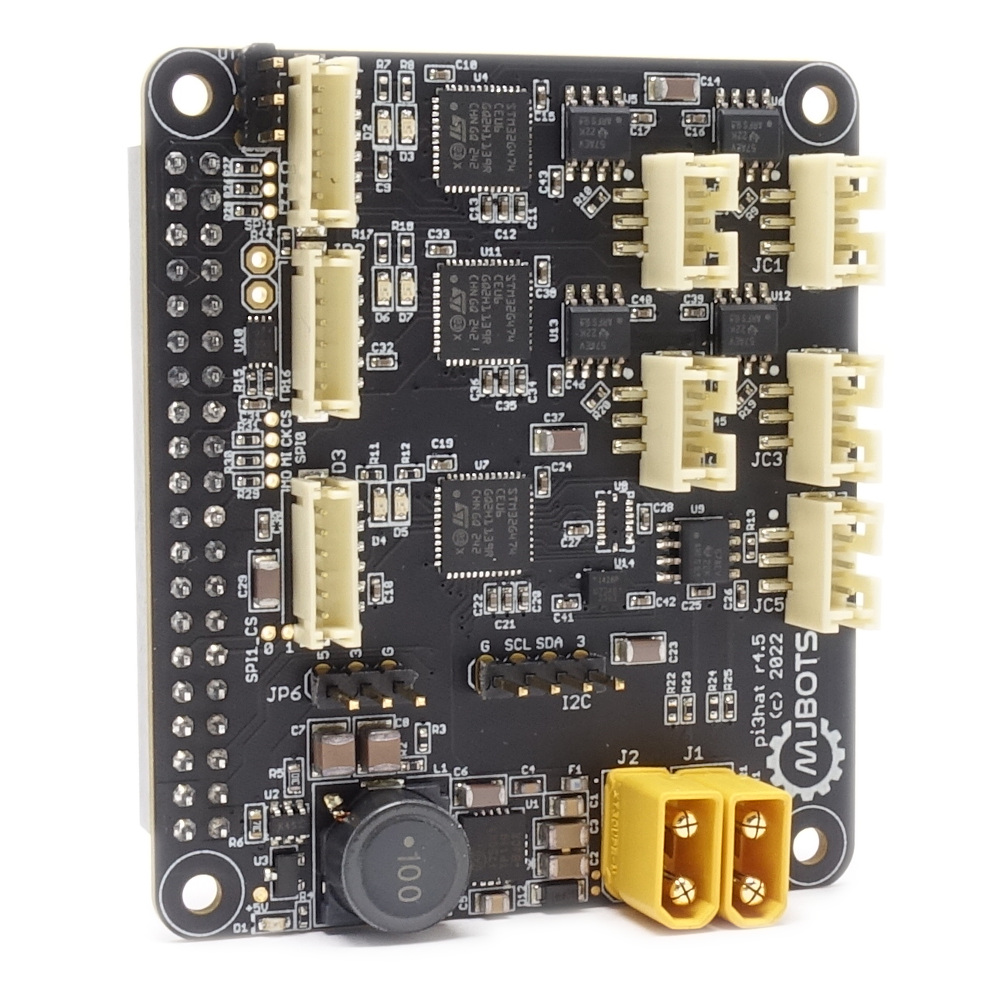

toptions.servo_map[1] = 1; // ID 1 is on JC1

toptions.servo_map[2] = 2; // ID 2 is on JC2

toptions.servo_map[3] = 2; // ID 3 is on JC2

auto transport = std::make_shared<Transport>(toptions);

mjbots::moteus::Controller controller([&]() {

mjbots::moteus::Controller::Options coptions;

coptions.transport = transport;

return coptions;

}());

In the above example, the pi3hat is configured with a fixed mapping from servo ID to CAN bus, and the CAN parameters for JC5 are set to be CAN 2.0 compatible.

Similarly, Pi3HatMoteusTransport has a Cycle() overload that accepts all the arguments available to Pi3Hat::Cycle, so you can use the moteus bindings while still keeping complete control over all the pi3hat-specific features if you desire.

Conclusion

That’s all there is to it! No firmware updates are required on the pi3hat or the moteus controllers to use the new API, so you can adopt it whenever you want to make your life easier!