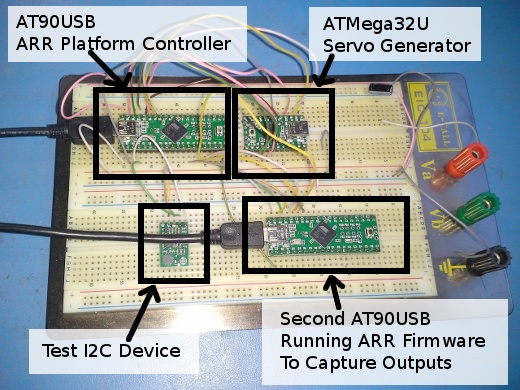

Autonomous Racing Rotorcraft: Initial Camera Exploration: System Image

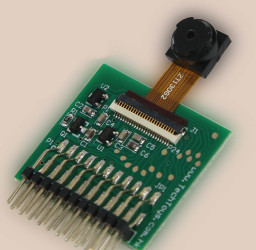

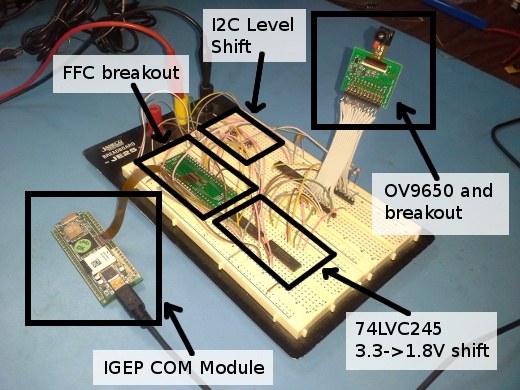

In my previous post I described how I wired up an OV9650-based camera module to my IGEP COM Module. The ultimate goal, of course, is a low-cost, reliable altimeter for my autonomous racing helicopter. When we left off, I had the camera breadboarded and connected to the IGEP COM Module, and I wanted to drive it over the I2C interface to verify it was working. While the IGEP COM Module’s camera I2C interface is easily exposed to Linux user space, the OV9650 doesn’t respond to I2C commands without a clock being present. Turning on the clock turned out to be quite a challenge.

First I explored the possibility of existing user space tools which might be able to poke directly into /dev/mem and activate the clock. Promisingly, I found omapconf, a user space tool which claimed to provide complete control over the clock systems of TI’s OMAP chips. Unfortunately, it only operated on OMAP4 and the unreleased OMAP5, not the OMAP3 that is in the IGEP COM Module. The source code wasn’t particularly helpful for quick inspection either. There is a lot of complexity in manipulating the processor’s clock registers, and implementing it from the datasheet didn’t seem like a particularly fruitful use of time. Thus discouraged, I moved on to the next possibility, kernel mode operations.

The Linux kernel for the IGEP COM Module already had routines designed to manipulate the clock registers, so why not use those? Well, to start with, building a custom kernel (and a suitable system image) for this board was hardly well documented. After much consternation, and then much patience, I pieced together a build setup from various IGEP wiki pages that allowed me to mostly reproduce the image that came on my board. It consisted of the following steps:

- Yocto: A tool used to create embedded Linux distributions. It is based on the OpenEmbedded recipe files, and includes reference layers for building a distribution.

  `git clone -b denzil git://git.yoctoproject.org/poky`

- ISEE Layer: ISEE publishes a Yocto layer for their boards which includes recipes for a few packages that don’t yet exist in Yocto.

  `git clone -b denzil git://git.isee.biz/pub/scm/meta-isee.git`

Once the relevant git trees are cloned, you still have to generate a build directory using the “oe-init-build-env” script, and modify files in that directory to configure the build. The “How to get the Poky Linux distribution” wiki page on the ISEE website had some hints there as well.
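As a rough sketch of that configuration step, the snippet below shows one way to register an extra layer in the generated build directory. The `add_layer` helper and the paths in the usage note are my own illustrative assumptions, not something taken from the ISEE wiki; the only parts that come from Yocto itself are the `oe-init-build-env` script and the `BBLAYERS` variable in `conf/bblayers.conf`.

```shell
# Hypothetical helper (not part of Yocto): register a layer by appending
# a BBLAYERS line to a bblayers.conf file.
add_layer() {
    conf_file="$1"    # path to conf/bblayers.conf inside the build directory
    layer_path="$2"   # absolute path to the layer checkout (e.g. meta-isee)

    # Skip the append if this helper already added the same layer.
    if grep -qF "BBLAYERS += \"$layer_path\"" "$conf_file"; then
        return 0
    fi
    printf 'BBLAYERS += "%s"\n' "$layer_path" >> "$conf_file"
}
```

Usage would look something like `source poky/oe-init-build-env build` (which creates `build/` and changes into it), followed by `add_layer conf/bblayers.conf "$PWD/../meta-isee"`. The machine selection in `conf/local.conf` still has to be set by hand according to the wiki page.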

With this configured, I was able to get a system image that closely matched the one that came with my board. In addition, I added a local layer to hold my modifications, then switched to the as yet unreleased ISEE 3.6 kernel, which has support for the ISEE camera board. My supposition is that the OV9650 camera I’m working with is close enough to the mt9v034 on the CAM BIRD that I will run into far fewer problems using their kernel. For my local development, I cloned the ISEE kernel repository and pointed my local layer’s Linux kernel recipe at the local git repository. This allows me to build local images with a custom patched kernel. Now I am truly able to move on to the next step: driving the clock!
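For illustration, pointing a kernel recipe at a local clone can be done with a small `.bbappend` in the local layer. The sketch below generates one. The helper name, the recipe file name, the repository path, and the branch are all placeholders I made up, since the actual recipe name in meta-isee isn’t given here. It relies only on BitBake’s git fetcher accepting `protocol=file` and on `SRCREV = "${AUTOREV}"` tracking the branch tip.

```shell
# Hypothetical helper: write a .bbappend that redirects a kernel recipe
# to a local git clone. Every argument is a placeholder chosen by the caller.
write_kernel_bbappend() {
    out_file="$1"   # e.g. <local-layer>/recipes-kernel/linux/<recipe>_<version>.bbappend
    repo_dir="$2"   # absolute path to the local kernel clone
    branch="$3"     # branch to build from

    mkdir -p "$(dirname "$out_file")"
    # The heredoc expands repo_dir and branch now; \${AUTOREV} is escaped
    # so that BitBake, not the shell, expands it later.
    cat > "$out_file" <<EOF
# Fetch the kernel from a local clone instead of the upstream URL.
SRC_URI = "git://${repo_dir};protocol=file;branch=${branch}"
# Build whatever commit is at the tip of the branch.
SRCREV = "\${AUTOREV}"
EOF
}
```

One caveat with this approach: assigning `SRC_URI` outright replaces anything the original recipe listed there (a defconfig or patches, for example), so those entries would need to be carried over by hand if the recipe depends on them.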

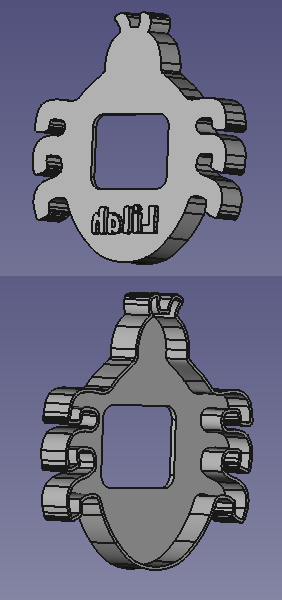
